During this course, you’ll explore transformers, model frameworks, and platforms such as Hugging Face and PyTorch. You’ll begin with a general framework for optimizing LLMs and quickly move on to fine-tuning generative AI models. Plus, you’ll learn about parameter-efficient fine-tuning (PEFT), low-rank adaptation (LoRA), quantized low-rank adaptation (QLoRA), and prompting.

In this module, you will be introduced to fine-tuning. You’ll get an overview of generative models and compare the Hugging Face and PyTorch frameworks. You’ll also gain insight into model quantization and learn to use pre-trained transformers and then fine-tune them with Hugging Face and PyTorch.

Learning Objectives

Framework for Deep Learning:

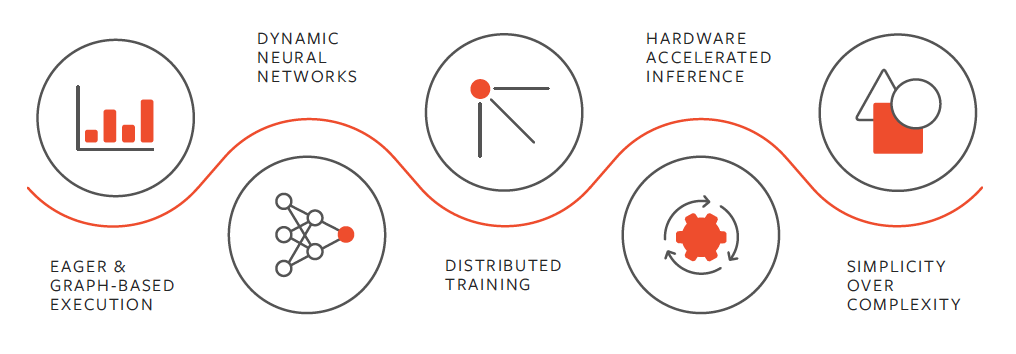

PyTorch is an open-source deep learning library originally developed by Facebook’s (now Meta’s) AI Research (FAIR) group. It is used to build, train, and deploy neural networks. Its dynamic computation graph, which is built on the fly as the code runs, and its ease of debugging make it a favorite among researchers and practitioners.
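To make "build, train, and deploy" concrete, here is a minimal sketch of defining and training a small network in PyTorch. The class name `TinyNet`, its layer sizes, the learning rate, and the random data are illustrative assumptions, not part of the course material. The `depth` argument shows what a dynamic computation graph means in practice: how many layers run is decided by ordinary Python at call time, and autograd records whatever actually executed.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical toy model; the sizes are arbitrary for illustration."""

    def __init__(self, in_features: int = 4, hidden: int = 16, out_features: int = 2):
        super().__init__()
        self.inp = nn.Linear(in_features, hidden)
        self.hidden = nn.Linear(hidden, hidden)  # reused a variable number of times
        self.out = nn.Linear(hidden, out_features)

    def forward(self, x: torch.Tensor, depth: int = 2) -> torch.Tensor:
        h = torch.relu(self.inp(x))
        # Plain Python loop: the graph is recorded as this code runs,
        # so each call can have a different depth.
        for _ in range(depth):
            h = torch.relu(self.hidden(h))
        return self.out(h)


torch.manual_seed(0)
model = TinyNet()
optimizer = torch.optim.SGD(model.parameters(), lr=0.05)
loss_fn = nn.MSELoss()

# Random regression data standing in for a real dataset (assumption).
x = torch.randn(32, 4)
y = torch.randn(32, 2)

losses = []
for step in range(100):
    optimizer.zero_grad()                 # clear gradients from the previous step
    loss = loss_fn(model(x, depth=2), y)
    loss.backward()                       # autograd walks the graph built in forward()
    optimizer.step()                      # update the weights in place
    losses.append(loss.item())

# The same weights can run with a different depth; a new graph is built for this call.
with torch.no_grad():
    preds = model(x, depth=3)
print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}, preds shape {tuple(preds.shape)}")
```

Because the graph is rebuilt on every forward pass, you can set a breakpoint or `print` inside `forward` and inspect real tensor values, which is much of what makes debugging straightforward.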

Low-Level Flexibility:

With its tensor operations, automatic differentiation (autograd), and a rich set of APIs, PyTorch gives users fine-grained control over model design and training processes. This makes it highly flexible for custom model implementations and novel research.
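As a minimal sketch of that fine-grained control, the snippet below builds a small expression from tensor operations, lets autograd compute the gradients, and then applies one gradient-descent update by hand instead of through an optimizer. The values of `x`, `w`, `b`, and the learning rate are arbitrary choices for illustration.

```python
import torch

# Input data (no gradient needed) and two trainable scalars.
x = torch.tensor([1.0, 2.0, 3.0])
w = torch.tensor(2.0, requires_grad=True)
b = torch.tensor(0.5, requires_grad=True)

# y = sum(w * x^2 + b) = w * sum(x^2) + 3 * b
y = (w * x**2 + b).sum()
y.backward()  # autograd fills in w.grad and b.grad

# Analytically: dy/dw = sum(x^2) = 14, dy/db = 3 (b is added to each of 3 elements)
print(w.grad.item(), b.grad.item())  # 14.0 3.0

# A manual SGD step: update the parameters outside the autograd graph.
lr = 0.1
with torch.no_grad():
    w -= lr * w.grad  # 2.0 - 0.1 * 14 = 0.6
    b -= lr * b.grad  # 0.5 - 0.1 * 3  = 0.2

# Gradients accumulate by default, so reset them before the next backward pass.
w.grad.zero_()
b.grad.zero_()
print(round(w.item(), 4), round(b.item(), 4))  # 0.6 0.2
```

Writing the update yourself like this is rarely necessary (`torch.optim` does it for you), but it shows that every step of training is exposed and can be customized.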

Ecosystem and Community Hub:

Hugging Face started as a chatbot company but shifted its focus to become a central repository for state-of-the-art models, particularly in natural language processing (NLP). Its ecosystem now includes tools for NLP, computer vision, and more.

High-Level Libraries:

The company is best known for its open-source libraries, such as Transformers, which offers a vast collection of pre-trained models (BERT, GPT, T5, etc.), and Datasets, which streamlines loading and processing data. Transformers runs on top of deep learning frameworks such as PyTorch and TensorFlow, abstracting away many of the complexities involved in model training and deployment.
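The sketch below illustrates that layering. It assumes the `transformers` library is installed alongside PyTorch. To avoid a download, it builds a deliberately tiny, randomly initialized BERT from a `BertConfig` (the sizes are arbitrary assumptions); in practice you would load pre-trained weights from the Hub with a call such as `AutoModel.from_pretrained("bert-base-uncased")`. Either way, the resulting model is an ordinary `torch.nn.Module`, so the usual PyTorch tools (autograd, optimizers, `model.eval()`) still apply.

```python
import torch
from transformers import BertConfig, BertModel

# A tiny configuration so the model builds instantly and needs no download.
# Real checkpoints (e.g. bert-base-uncased) use far larger sizes.
config = BertConfig(
    vocab_size=100,
    hidden_size=32,          # must be divisible by num_attention_heads
    num_hidden_layers=2,
    num_attention_heads=2,
    intermediate_size=64,
)
model = BertModel(config)    # random weights, not pre-trained
model.eval()                 # disable dropout for inference

# Arbitrary token IDs standing in for tokenizer output (assumption).
input_ids = torch.tensor([[5, 17, 42, 8]])
with torch.no_grad():
    outputs = model(input_ids=input_ids)

print(outputs.last_hidden_state.shape)     # torch.Size([1, 4, 32])
print(isinstance(model, torch.nn.Module))  # True
```

The high-level library handles the architecture definition (embeddings, attention layers, pooling), while PyTorch still performs the underlying tensor computation.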